Now that the dust has settled a bit since Nutanix’s .Next annual conference and I’ve had some time to reflect on my time in New Orleans, let’s burn a few minutes of eye time pulling those thoughts out of my brain and putting them in writing. After all, that is what a blog is for, right? Judging by the age of my blog and the utter lack of posts I’ve written so far, I either have an abundance of space in my head or a slow leak somewhere. I’m going with the slow leak, but you can decide for yourself. Now on to why you are really here!

I won’t hit on everything announced, but let’s go with a few that stuck out to me.

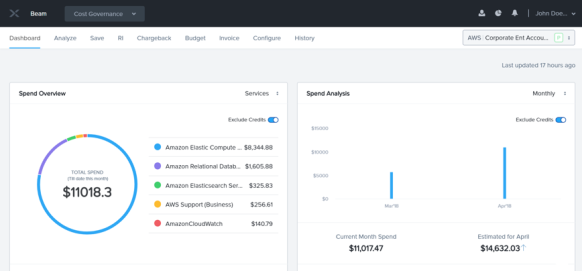

Beam

Early this year I spent a few days taking an AWS course in Cambridge. I came to MANY conclusions during that class, but one that kept coming up among the crowd of both customers and pre-sales techie types is how unpredictable their monthly AWS bill is. Until they actually get that bill, it’s somewhat of a guessing game, as there are so many different individual fees that all add up. I like to equate this to getting a hospital bill. You know you need all these services and medicines, and every individual item touched, down to the tissues you sneezed into and the ones you don’t even remember, ends up on your invoice, but you never have a clue what the bottom line is going to look like until you have it in hand. At least for AWS (and others soon!) there’s now Beam. Beam shines a light (tada, name… Beam) into your cloud costs to help you manage them and provide governance around them across providers. This product is something you can subscribe to immediately, and it is also technically the first SaaS offering from Nutanix as they expand beyond HCI infrastructure. I’m excited to see where this product goes as more providers are added and it gets integrated into the Nutanix portfolio. Now if only someone could do the same for my healthcare bills… See Nutanix’s blog post about it, which is also where I “borrowed” the image.

Flow

Security is king these days. It’s no longer OK to protect your infrastructure only at the edge, in a north-south fashion. Network security teams are now looking to protect data east-west as well, meaning from VM to VM or application to application. The rise of micro-segmentation partially stems from increased data threats due to malware: cordoning off VMs from one another helps keep malware from spreading VM to VM inside a network. The problem until now was that SDN solutions have been very complex to stand up and maintain, and tend to be extremely professional services heavy. Introducing Flow… It’s all built directly into the Nutanix software stack and just needs to be enabled. No more lengthy, time-consuming engagements, and no additional infrastructure VMs to deploy. Just license, enable, and start micro-segmenting. Nutanix’s story of simplicity continues even into software-defined networking. Amazing. Historically I’ve seen many customers never quite get to going down this route, mainly due to complexity of management and, yes, of course cost, but when it’s made this easy to deploy, I can see adoption skyrocketing. See the official Nutanix blog post on Flow for reference.
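If the east-west idea is new to you, here is a tiny sketch of what a micro-segmentation policy boils down to: VMs get tagged into groups, and traffic between groups is denied unless a rule explicitly allows it. To be clear, this is only my own illustration in Python, not Flow’s actual API or policy format. The VM names, group names, ports, and the `is_allowed` helper are all made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One allowed east-west flow between two groups (hypothetical model)."""
    src_group: str
    dst_group: str
    port: int


# Hypothetical VM-to-group tagging; a real platform would manage this for you.
VM_GROUPS = {
    "web-01": "web",
    "app-01": "app",
    "db-01": "db",
    "hr-01": "hr",
}

# Explicit allow list. Anything not listed here is dropped (default deny).
ALLOW_RULES = {
    Rule("web", "app", 8443),
    Rule("app", "db", 5432),
}


def is_allowed(src_vm: str, dst_vm: str, port: int) -> bool:
    """Return True only if an explicit rule permits this VM-to-VM flow."""
    src = VM_GROUPS.get(src_vm)
    dst = VM_GROUPS.get(dst_vm)
    if src is None or dst is None:
        # Untagged or unknown VMs get no access at all.
        return False
    return Rule(src, dst, port) in ALLOW_RULES


if __name__ == "__main__":
    print(is_allowed("web-01", "app-01", 8443))  # web tier may talk to app tier
    print(is_allowed("web-01", "db-01", 5432))   # web tier may not skip to the database
    print(is_allowed("hr-01", "db-01", 5432))    # unrelated VM cannot reach the database
```

The point of the default-deny design is that a compromised web VM can only reach what the rules name, so malware has far fewer places to hop to.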

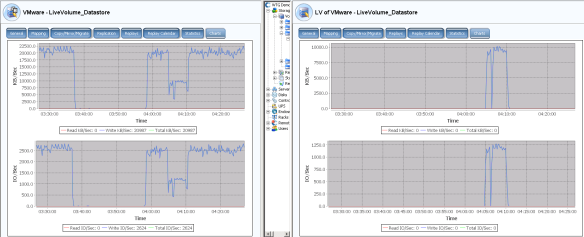

X-Ray: Open Sourced

Lastly, Nutanix has made a move to open source its X-Ray tool. A couple of months ago I got some hands-on time with it for the first time and was really impressed with where it is today, and I look forward to using it more in the future. X-Ray was designed by Nutanix as a tool to test HCI platforms against real-world scenarios. Initially intended for their own platform, it has gained additional hypervisors, HCI hardware platforms, and testing scenarios since its release. Although it was already built on standard testing tools, being open source now exposes its neutrality to the masses. Another great side effect is that it opens up the tool for further development of both test plans and support for other HCI platforms. I for one think this was a great idea: taking what could have been just a pre-sales engagement tool and opening it up for anyone to use to validate either their existing infrastructure or other gear that is in a POC/testing phase. Traditional legacy testing tools just don’t cut it anymore in the world of HCI, so I’m glad to see some movement to modernize testing scenarios for real-world applications, and I’m happy to see Nutanix leading that cause. More info on this can be found on Nutanix’s blog.

That’s all I got for now. Let’s hope this reinvigoration of my blog continues. Until next time!

You can find all of the linked blog posts (and others!) on Nutanix’s main blog site here: https://www.nutanix.com/blog/

SCv2000 – Base 2U array with up to 12 3.5” drives

the front and 2 x 2.5” in the back of the chassis. This is a unique configuration that, when combined with SanDisk DAS Cache, VMware VSAN, or possibly even PernixData (not sure if this has been tested yet, but I will volunteer here), would make a great solution, providing high IOPS on top of dense storage using the integrated caching software each of these vendors provides.